SD-Access: CCIE 1.0 Journey

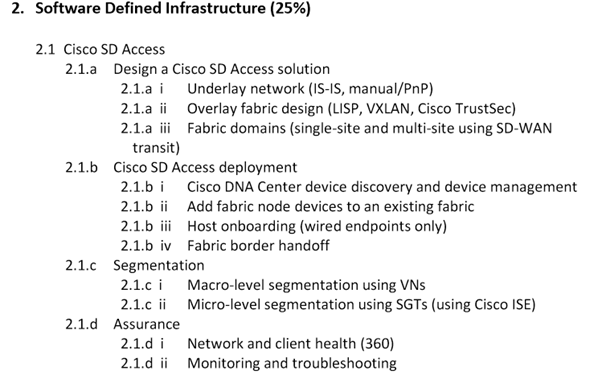

What is SD-Access?

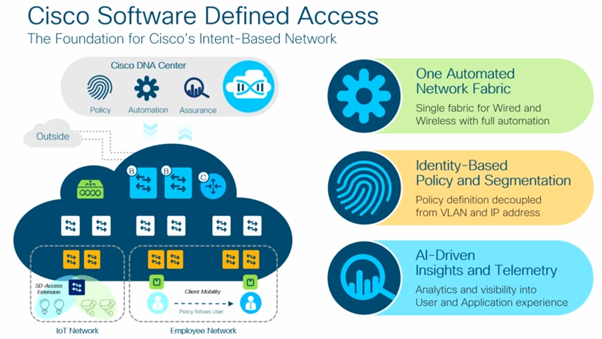

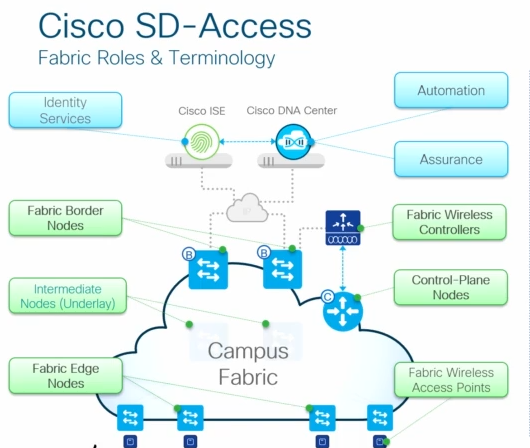

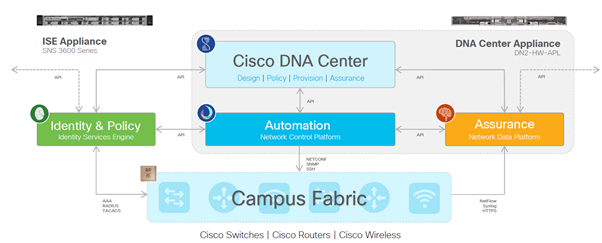

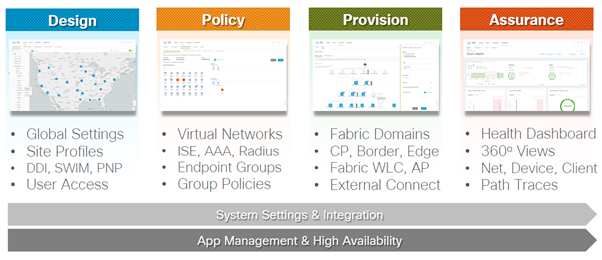

SD-Access is the combination of Campus Fabric and Cisco DNA Center (Automation and Assurance).

Cisco DNA Center provides a GUI approach to the automation and assurance of all fabric configuration, management, and group-based policy.

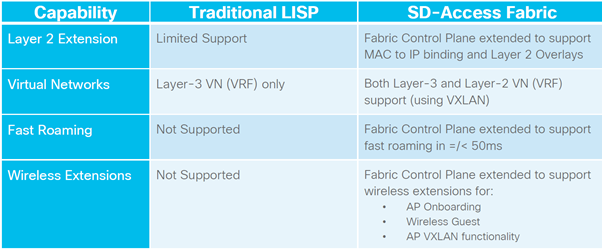

What is Campus Fabric? A CLI or API approach to building a LISP + VXLAN + CTS fabric overlay for an enterprise campus network. (CLI means box-by-box configuration, as on any other Cisco device; API means automation using the available programmability models, such as NETCONF/YANG.)

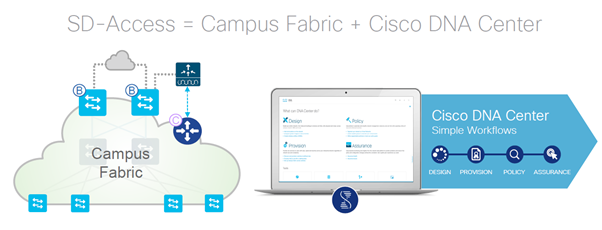

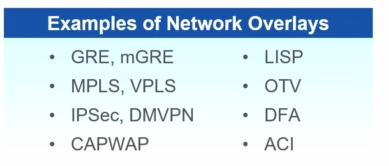

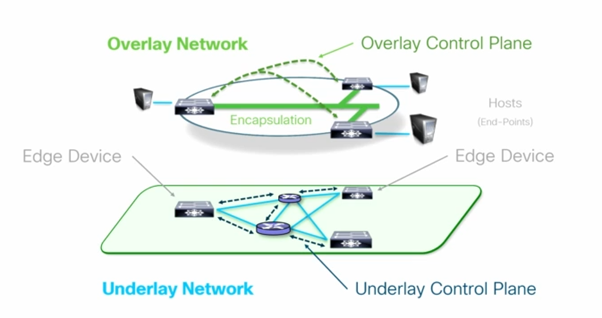

Fabric: an overlay, i.e. a logical topology used to virtually connect devices, built on top of the physical underlay topology.

Available models:

Overlay and Underlay

High-level SD-Access roles and terminology:

| Role | Description |
|---|---|
| Network Automation | Simple GUI and APIs for intent-based automation of wired and wireless fabric devices |
| Network Assurance | Data collection and analysis of endpoint-to-application flows, and monitoring of fabric device status |

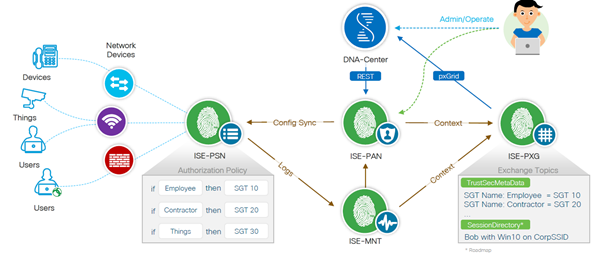

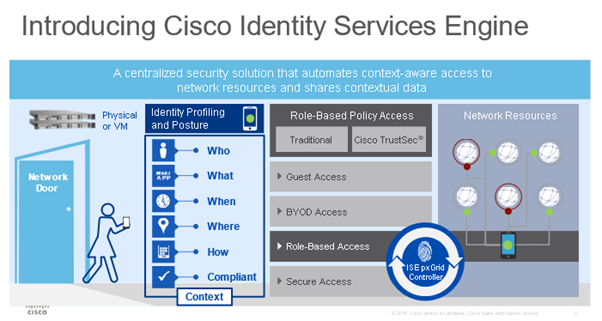

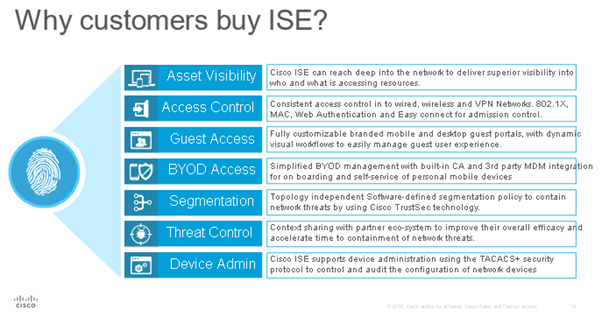

| Identity Services (ISE) | NAC and identity services for dynamic endpoint-to-group mapping and policy definition |

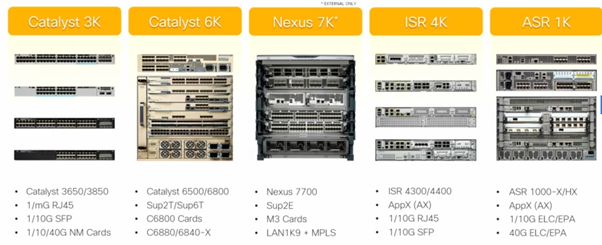

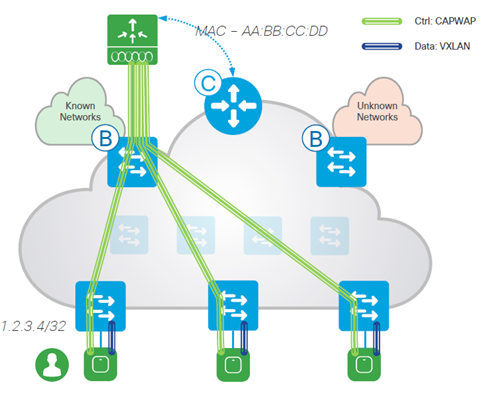

| Role | Description |
|---|---|
| Control-Plane Node (C) | Map system that manages the endpoint-to-device relationship |
| Fabric Border Nodes (B) | A fabric device (core) that connects external L3 networks to the SD-Access fabric |
| Fabric Edge Nodes | A fabric edge device (access or distribution) that connects wired endpoints to the SD-Access fabric |
| Fabric Wireless Controller | A fabric device (WLC) that connects fabric APs and wireless endpoints to the SD-Access fabric |

Control-Plane Node: runs a Host Tracking Database (HTDB) of map location information.
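To make the Host Tracking Database idea concrete, here is a minimal Python sketch under a simplified model: the HTDB is a dictionary keyed by (LISP instance ID, endpoint ID) that returns the RLOC (the loopback of the fabric node behind which the endpoint sits). The class and method names (`HostTrackingDatabase`, `map_register`, `map_request`) and all addresses and instance IDs are hypothetical; they only mirror the LISP Map-Register / Map-Request messages and are not how a real control-plane node is implemented.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Key: (LISP instance ID, endpoint ID such as "10.1.1.10/32")
EidKey = Tuple[int, str]


@dataclass
class HostTrackingDatabase:
    """Toy model of a control-plane node's EID-to-RLOC mappings."""
    entries: Dict[EidKey, str] = field(default_factory=dict)

    def map_register(self, instance_id: int, eid: str, rloc: str) -> None:
        # An edge node registers an endpoint (or re-registers it after a move).
        self.entries[(instance_id, eid)] = rloc

    def map_request(self, instance_id: int, eid: str) -> Optional[str]:
        # Returns the RLOC for the EID, or None if the EID is unknown.
        return self.entries.get((instance_id, eid))


htdb = HostTrackingDatabase()
htdb.map_register(4099, "10.1.1.10/32", "192.168.255.1")  # host behind Edge 1 (hypothetical)
print(htdb.map_request(4099, "10.1.1.10/32"))  # 192.168.255.1
print(htdb.map_request(4100, "10.1.1.10/32"))  # None: a different instance ID
```

Keying on the instance ID as well as the EID is what lets each virtual network keep its own view of the same address space.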

Control-Plane Node limits:

- Each fabric site can support up to six control-plane nodes.

- A wired-only network supports a maximum of six control-plane nodes.

- A wireless-only or wired + wireless network supports a maximum of two control-plane nodes.

- All control-plane nodes in a given site work in active-active mode, without any synchronization between them (each fabric node registers its endpoints with every control-plane node).
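The limits above, and the reason active-active works without synchronization, can be sketched in a few lines of Python. This assumes a simplified model in which the registering fabric node sends the same mapping to every control-plane node; the function names and node names are hypothetical, and the lower bound of one control-plane node per site is an assumption of this sketch.

```python
from typing import Dict, List


def max_control_plane_nodes(has_wireless: bool) -> int:
    # Per-site limits from the list above: six for wired-only sites,
    # two when wireless is present (wireless-only or wired + wireless).
    return 2 if has_wireless else 6


def check_site(cp_nodes: List[str], has_wireless: bool) -> None:
    limit = max_control_plane_nodes(has_wireless)
    if not 1 <= len(cp_nodes) <= limit:
        raise ValueError(
            f"site needs 1..{limit} control-plane nodes, got {len(cp_nodes)}"
        )


def register_everywhere(dbs: Dict[str, Dict[str, str]], eid: str, rloc: str) -> None:
    # Assumed active-active model: the same mapping is sent to every
    # control-plane node, so each one holds a full copy and no sync is needed.
    for db in dbs.values():
        db[eid] = rloc


cp_nodes = ["cp1", "cp2"]
check_site(cp_nodes, has_wireless=True)  # passes: 2 <= 2
dbs: Dict[str, Dict[str, str]] = {name: {} for name in cp_nodes}
register_everywhere(dbs, "10.1.1.10/32", "192.168.255.1")
print(dbs["cp1"] == dbs["cp2"])  # True
```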

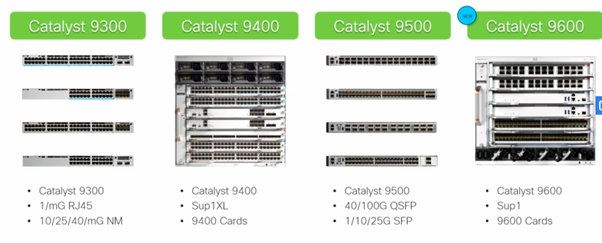

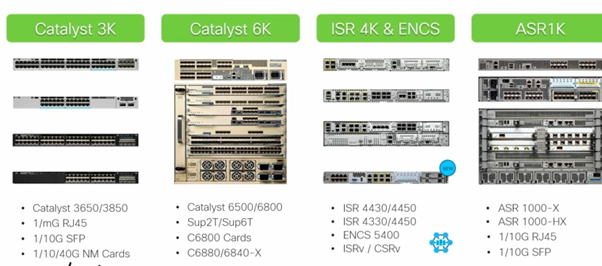

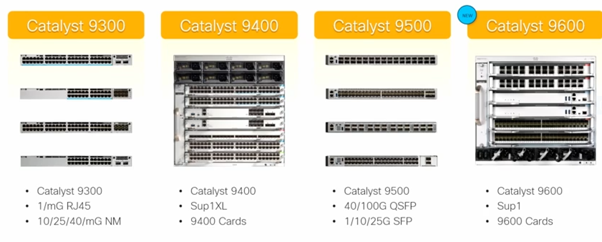

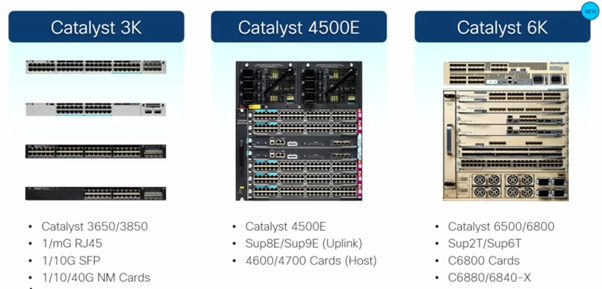

Control-Plane Node supported devices:

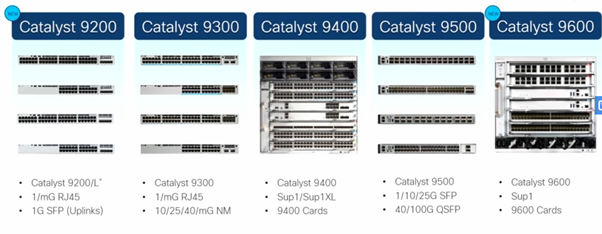

New Family of Cat 9K

Legacy support:

Fabric Border Nodes (B): the entry and exit point for data traffic going into and out of a fabric.

- Each fabric site supports a maximum of four Outside World/External Border nodes.

- Each fabric site supports a maximum of four Anywhere/Internal + External Border nodes.

- The two limits above are cumulative within a given fabric site: External and Anywhere borders together cannot exceed four.

Example: if a fabric site has two Outside World borders, it can have at most two more Anywhere borders.

- Each fabric site can support a maximum of 100 Rest of Company/Internal Border nodes.
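The border limits above can be expressed as a small validation function. This is a minimal sketch assuming each border node is described by one role string (`"internal"`, `"external"` or `"anywhere"`, names chosen here for illustration); it checks only the limits stated in this post.

```python
from collections import Counter
from typing import Iterable

MAX_EXTERNAL_PLUS_ANYWHERE = 4  # Outside World + Anywhere borders share this limit
MAX_INTERNAL = 100              # Rest of Company borders


def validate_borders(roles: Iterable[str]) -> Counter:
    """roles: one entry per border node: 'internal', 'external' or 'anywhere'."""
    counts = Counter(roles)
    unknown = set(counts) - {"internal", "external", "anywhere"}
    if unknown:
        raise ValueError(f"unknown border role(s): {sorted(unknown)}")
    # Counter returns 0 for missing keys, so absent roles count as zero.
    if counts["external"] + counts["anywhere"] > MAX_EXTERNAL_PLUS_ANYWHERE:
        raise ValueError("External + Anywhere borders exceed 4 per site")
    if counts["internal"] > MAX_INTERNAL:
        raise ValueError("Internal borders exceed 100 per site")
    return counts


# Two Outside World borders leave room for exactly two Anywhere borders.
validate_borders(["external", "external", "anywhere", "anywhere", "internal"])
```

Adding a fifth External or Anywhere border to that list raises `ValueError`, matching the example above.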

There are three types of border node:

- Rest of Company/Internal Border: used for “known” routes inside your company

- Outside World/External Border: used for “unknown” routes outside your company

- Anywhere/Internal + External Border: used for both “known” and “unknown” routes for your company

Rest of Company/Internal Border advertises endpoints to the outside, and known subnets to the inside

- Connects to any “known” IP subnets available from the outside network (e.g. DC, WLC, FW, etc.)

- Exports all internal IP Pools to outside (as aggregate), using a traditional IP routing protocol(s).

- Imports and registers (known) IP subnets from outside, into the Control-Plane Map System except the default route.

- Hand-off requires mapping the context (VRF & SGT) from one domain to another.

Outside World/External Border is a “Gateway of Last Resort” for any unknown destinations

- Connects to any “unknown” IP subnets, outside of the network (e.g. Internet, Public Cloud)

- Exports all internal IP Pools outside (as aggregate) into traditional IP routing protocol(s).

- Does NOT import any routes! It is a “default” exit, if no entry is available in Control-Plane.

- Hand-off requires mapping the context (VRF & SGT) from one domain to another.

Anywhere/Internal + External Border is a single exit point for any known and unknown destinations

- Connects to any “unknown” IP subnets, outside of the network (e.g. Internet, Public Cloud) and “known” IP subnets available from the outside network (e.g. DC, WLC, FW, etc.)

- Imports and registers (known) IP subnets from outside, into the Control-Plane Map System except the default route.

- Exports all internal IP Pools outside (as aggregate) into traditional IP routing protocol(s).
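The practical difference between the border types shows up in the forwarding decision: a destination known to the control plane (an endpoint, or a subnet imported by an Internal/Anywhere border) goes to its registered RLOC, and anything else falls back to an External/Anywhere border as the gateway of last resort. Below is a simplified Python sketch of that decision, assuming the map cache is a plain prefix-to-RLOC dictionary; in real SD-Access the edge sends a LISP Map-Request and, on a negative reply, forwards to the border acting as proxy ETR. `choose_exit` and all addresses are hypothetical.

```python
import ipaddress
from typing import Dict, List, Optional


def choose_exit(dst: str, map_cache: Dict[str, str],
                external_borders: List[str]) -> Optional[str]:
    """Return the RLOC that traffic for dst is sent to (toy model)."""
    addr = ipaddress.ip_address(dst)
    # Longest-prefix match over prefixes known to the control plane
    # (registered endpoints plus subnets imported by Internal/Anywhere borders).
    best_len, best_rloc = -1, None
    for prefix, rloc in map_cache.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best_len, best_rloc = net.prefixlen, rloc
    if best_rloc is not None:
        return best_rloc
    # No entry (the default route is never registered): use an External or
    # Anywhere border as the gateway of last resort.
    return external_borders[0] if external_borders else None


map_cache = {
    "10.1.1.10/32": "192.168.255.1",    # endpoint behind an edge
    "172.16.0.0/16": "192.168.255.10",  # DC subnet imported by an Internal border
}
borders = ["192.168.255.20"]            # External border
print(choose_exit("10.1.1.10", map_cache, borders))   # 192.168.255.1
print(choose_exit("172.16.5.5", map_cache, borders))  # 192.168.255.10
print(choose_exit("8.8.8.8", map_cache, borders))     # 192.168.255.20
```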

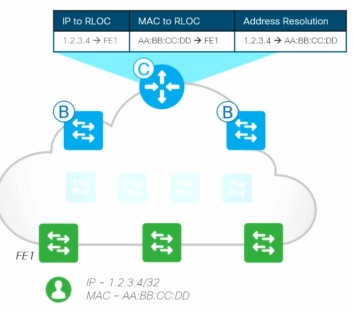

Supported devices:

*Nexus 7K supports the External border role only.

Edge Node: provides first-hop services for users/devices connected to a fabric.

Every edge switch uses the same gateway IP address for a given host pool: the anycast gateway address. This benefits host mobility, because the default gateway stays the same wherever the host connects.

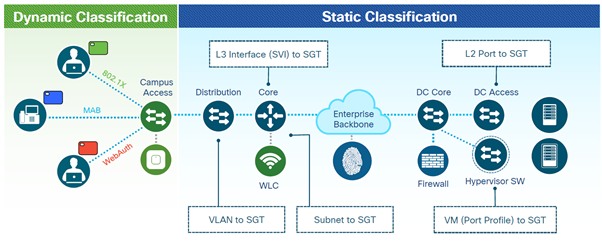

- Responsible for Identifying and Authenticating Endpoints (e.g. Static, 802.1X, Active Directory)

- Register specific Endpoint ID info (e.g. /32 or /128) with the Control-Plane Node(s)

- Provide an Anycast L3 Gateway for the connected Endpoints (same IP address on all Edge nodes)

- Performs encapsulation / de-encapsulation of data traffic to and from all connected Endpoints

- Each fabric site supports a maximum of 500 edge nodes.

- Each fabric site supports a maximum of 500 IP subnets.
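The “register specific Endpoint ID info (e.g. /32 or /128)” step can be illustrated with Python's standard `ipaddress` module: the edge turns a learned host address into a host-specific prefix inside its host pool. This is a minimal sketch; `host_eid` and the sample pools are hypothetical, and a real edge also registers MAC-based EIDs.

```python
import ipaddress


def host_eid(ip: str, host_pool: str) -> str:
    """Build the host-specific EID (/32 or /128) an edge would register."""
    addr = ipaddress.ip_address(ip)
    pool = ipaddress.ip_network(host_pool)
    if addr not in pool:
        raise ValueError(f"{ip} is not in host pool {host_pool}")
    # max_prefixlen is 32 for IPv4 and 128 for IPv6.
    return str(ipaddress.ip_network(f"{addr}/{addr.max_prefixlen}"))


print(host_eid("10.1.1.10", "10.1.1.0/24"))            # 10.1.1.10/32
print(host_eid("2001:db8:1::10", "2001:db8:1::/64"))   # 2001:db8:1::10/128
```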

Supported devices:

*Cat 9200 supports 4 VRFs; Cat 9200-L supports only 1 VRF.

Fabric Wireless Controller: a fabric device (WLC) that connects APs and wireless endpoints to the SDA fabric

- Connects to Fabric via Border (Underlay)

- Fabric Enabled APs connect to the WLC (CAPWAP) using a dedicated Host Pool (Overlay)

- Fabric Enabled APs connect to the Edge via VXLAN

- Wireless Clients (SSIDs) use regular Host Pools for data traffic and policy (same as Wired)

- Fabric Enabled WLC registers Clients with the Control-Plane (as located on local Edge + AP)
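The last point, the WLC registering clients “as located on local Edge + AP”, is what keeps wireless data traffic on the edge instead of hairpinning through the WLC. Below is a simplified Python sketch, assuming a hypothetical AP-to-edge inventory and keying the registration by client IP only for readability (the real registration also involves the client MAC).

```python
from typing import Dict

# Hypothetical inventory: the fabric edge (RLOC) each fabric AP is attached to.
AP_TO_EDGE_RLOC: Dict[str, str] = {
    "AP-Floor1": "192.168.255.1",
    "AP-Floor2": "192.168.255.2",
}


def wlc_register_client(htdb: Dict[str, str], client_ip: str, ap_name: str) -> None:
    # The WLC handles the client join, but registers the client against the
    # edge the AP hangs off, so data flows edge <-> AP over VXLAN.
    htdb[f"{client_ip}/32"] = AP_TO_EDGE_RLOC[ap_name]


htdb: Dict[str, str] = {}
wlc_register_client(htdb, "10.2.2.20", "AP-Floor1")
# The client roams to an AP on another edge: the WLC simply re-registers it.
wlc_register_client(htdb, "10.2.2.20", "AP-Floor2")
print(htdb)  # {'10.2.2.20/32': '192.168.255.2'}
```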

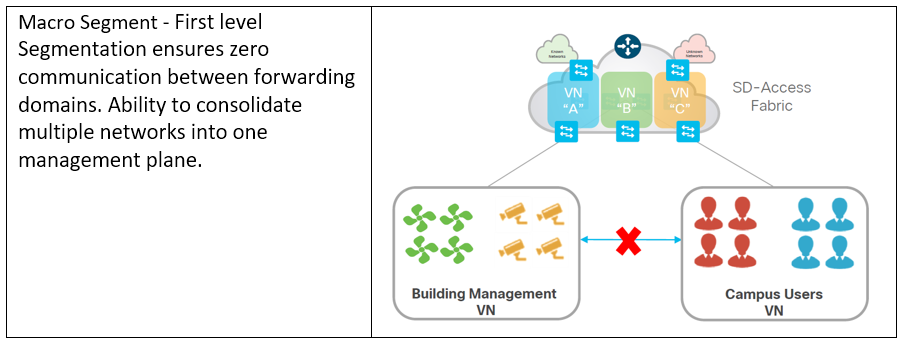

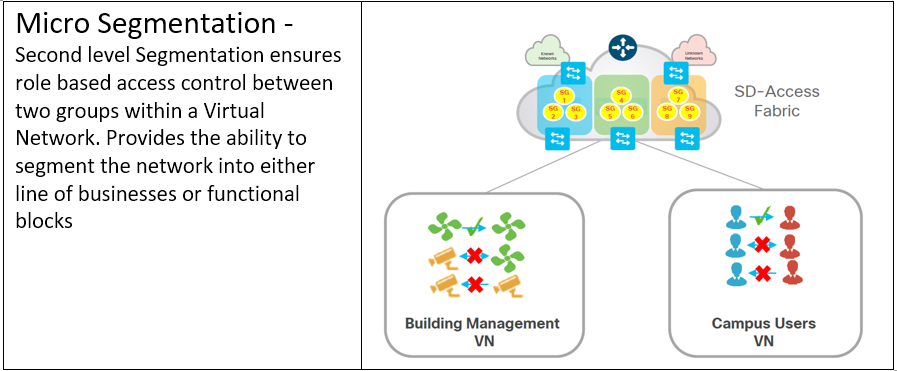

| Virtual Network | Maintains a separate routing and switching table for each instance. The control plane uses the Instance ID to maintain separate VRF topologies (the “Default” VRF is Instance ID “4098”). Nodes add a VNID to the fabric encapsulation. Endpoint ID prefixes (host pools) are routed and advertised within a virtual network. Uses standard “vrf definition” configuration, along with RD and RT for remote advertisement (border node). |
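Scoping every mapping by instance ID is what keeps virtual networks apart, even when they reuse the same addresses. A minimal Python sketch, assuming a hypothetical VN-to-instance-ID mapping (the values 4100 and 4101 are illustrative only, not what Cisco DNA Center assigns):

```python
from typing import Dict, Optional, Tuple

# Hypothetical VN-to-instance-ID mapping (illustrative values only).
VN_INSTANCE_ID: Dict[str, int] = {"CORP": 4100, "GUEST": 4101}

# One mapping table, but every key is scoped by instance ID, so the same
# prefix can exist in two VNs without clashing (separate VRF topologies).
eid_table: Dict[Tuple[int, str], str] = {}


def register(vn: str, eid: str, rloc: str) -> None:
    eid_table[(VN_INSTANCE_ID[vn], eid)] = rloc


def lookup(vn: str, eid: str) -> Optional[str]:
    return eid_table.get((VN_INSTANCE_ID[vn], eid))


register("CORP", "10.10.10.5/32", "192.168.255.1")
register("GUEST", "10.10.10.5/32", "192.168.255.3")  # overlapping IP, other VN
print(lookup("CORP", "10.10.10.5/32"))   # 192.168.255.1
print(lookup("GUEST", "10.10.10.5/32"))  # 192.168.255.3
```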

| Scalable Group | A logical policy object used to “group” users and/or devices. Nodes use scalable groups to identify endpoints and assign each one a unique Scalable Group Tag (SGT). Nodes add the SGT to the fabric encapsulation. SGTs are used to manage address-independent “group-based policies”. Edge or border nodes use the SGT to enforce local Scalable Group ACLs (SGACLs). |
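Group-based policy boils down to a matrix indexed by source and destination SGT, with no IP addresses involved. The Python sketch below assumes hypothetical SGT values, a two-cell matrix, and a default-permit action for unmatched pairs; it shows the lookup only, not how SGACLs are programmed on the switch (enforcement typically happens where the destination SGT is known, i.e. on the egress node).

```python
from typing import Dict, Tuple

# Hypothetical SGT values and policy matrix: (source SGT, destination SGT) -> action.
EMPLOYEES, CONTRACTORS, SERVERS = 10, 20, 30
POLICY: Dict[Tuple[int, int], str] = {
    (EMPLOYEES, SERVERS): "permit",
    (CONTRACTORS, SERVERS): "deny",
}
DEFAULT_ACTION = "permit"  # assumption: default permit when no cell matches


def enforce(src_sgt: int, dst_sgt: int) -> str:
    # The decision uses only group tags, never IP addresses, which is what
    # makes the policy address-independent.
    return POLICY.get((src_sgt, dst_sgt), DEFAULT_ACTION)


print(enforce(CONTRACTORS, SERVERS))  # deny
print(enforce(EMPLOYEES, SERVERS))    # permit
```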

| Host Pool | Provides the basic IP functions necessary for attached endpoints. Edge nodes use a Switch Virtual Interface (SVI), with IP address/mask etc., per host pool. The fabric uses dynamic EID mapping to advertise each host pool (per Instance ID). Fabric dynamic EID allows host-specific (/32, /128 or MAC) advertisement and mobility. Host pools can be assigned dynamically (via host authentication) and/or statically (per port). |

| Anycast GW | Provides a single L3 default gateway for IP-capable endpoints. Similar principle and behavior to HSRP/VRRP, with a shared “virtual” IP and MAC address. The same Switch Virtual Interface (SVI) is present on EVERY edge with the SAME virtual IP and MAC. The control plane, with fabric dynamic EID mapping, maintains the host-to-edge relationship. When a host moves from Edge 1 to Edge 2, it does not need to change its default gateway. |
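Host mobility with an anycast gateway can be modeled in a few lines: every edge exposes the identical SVI, so the gateway a host sees never changes, and only the control-plane mapping is updated when it roams. This Python sketch uses hypothetical names, a hypothetical gateway IP, and a hypothetical virtual MAC.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Svi:
    ip: str
    mac: str


# Same SVI (virtual IP and MAC) on every edge for this host pool (hypothetical values).
ANYCAST_SVI = Svi(ip="10.1.1.1", mac="0000.0c9f.f001")
edges: Dict[str, Svi] = {"Edge1": ANYCAST_SVI, "Edge2": ANYCAST_SVI}
htdb: Dict[str, str] = {}


def attach(host: str, edge: str) -> Svi:
    htdb[host] = edge    # the edge registers the host with the control plane
    return edges[edge]   # the gateway the host sees on this edge


gw_before = attach("10.1.1.10/32", "Edge1")
gw_after = attach("10.1.1.10/32", "Edge2")  # the host roams
print(gw_before == gw_after, htdb["10.1.1.10/32"])  # True Edge2
```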

| Stretched Subnets | Allow an IP subnet to be “stretched” via the overlay. Host IP-based traffic arrives on the local fabric edge (SVI) and is then transferred by the fabric. Fabric dynamic EID mapping allows host-specific (/32, /128, MAC) advertisement and mobility. Host 1 connected to Edge A can now use the same IP subnet to communicate with Host 2 on Edge B, without needing a VLAN to connect Host 1 and Host 2. |

| Layer 2 Overlay | Allows non-IP endpoints to use broadcast and L2 multicast. Similar principle and behavior to a Virtual Private LAN Service (VPLS) P2MP overlay. Uses a pre-built multicast underlay to set up a P2MP tunnel between all fabric nodes. L2 broadcast and multicast traffic is distributed to all connected fabric nodes. Can be enabled for specific host pools that require L2 services (use stretched subnets for L3). NOTE: L3 Integrated Routing and Bridging (IRB) is not supported at this time. |

Campus Fabric: Key Components

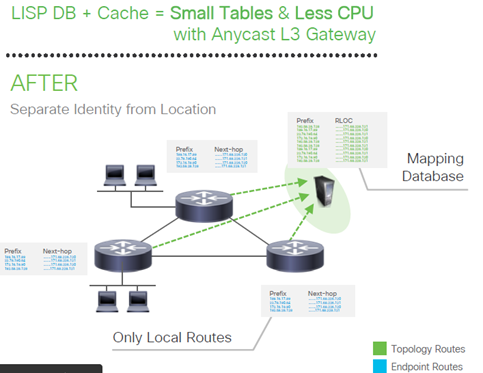

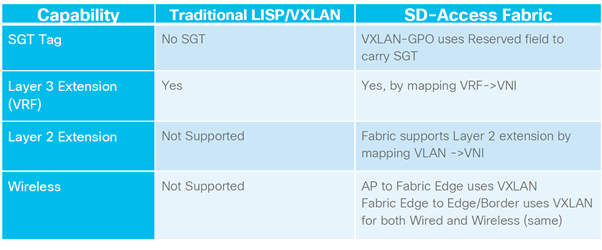

1. Control-Plane based on LISP

2. Data-Plane based on VXLAN

3. Policy-Plane based on CTS

1. Control-Plane based on LISP

2. Data-Plane based on VXLAN

Fabric Data-Plane provides the following:

• Underlay address advertisement & mapping

• Automatic tunnel setup (Virtual Tunnel End-Points)

• Frame encapsulation between Routing Locators

Support for LISP or VXLAN header format

• Nearly the same, with different fields & payload

• LISP header carries IP payload (IP in IP)

• VXLAN header carries MAC payload (MAC in IP)

Triggered by LISP Control-Plane events

• ARP or NDP learning on L3 gateways

• Map-Reply or Cache on Routing Locators
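To see how one header carries both the virtual network and the group tag, here is a Python sketch that packs an 8-byte VXLAN header with the Group Policy Option (VXLAN-GPO), the VXLAN variant that carries the SGT next to the VNI. The field layout follows my reading of the IETF VXLAN-GPO draft (G flag 0x80 and I flag 0x08 in the first byte, 16-bit Group Policy ID in bytes 2-3, 24-bit VNI in bytes 4-6, last byte reserved); treat it as an illustration, not a packet-accurate implementation. The VNI and SGT values are hypothetical, and the outer Ethernet/IP/UDP headers are omitted.

```python
import struct

G_FLAG = 0x80  # Group Policy ID present
I_FLAG = 0x08  # VNI valid


def pack_vxlan_gpo(vni: int, sgt: int) -> bytes:
    """Build the 8-byte VXLAN-GPO header (outer headers not included)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI is 24 bits")
    if not 0 <= sgt < 2**16:
        raise ValueError("Group Policy ID (SGT) is 16 bits")
    # Byte 0: flags; byte 1: other flag bits (zero here);
    # bytes 2-3: Group Policy ID; bytes 4-7: VNI shifted left 8 (low byte reserved).
    return struct.pack("!BBHI", G_FLAG | I_FLAG, 0, sgt, vni << 8)


def unpack_vxlan_gpo(header: bytes) -> tuple:
    flags, _, sgt, vni_field = struct.unpack("!BBHI", header)
    return flags, vni_field >> 8, sgt


hdr = pack_vxlan_gpo(vni=8190, sgt=17)
print(hdr.hex())              # 88000011001ffe00
print(unpack_vxlan_gpo(hdr))  # (136, 8190, 17)
```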

3. Policy-Plane based on CTS

What is Cisco DNA Center?

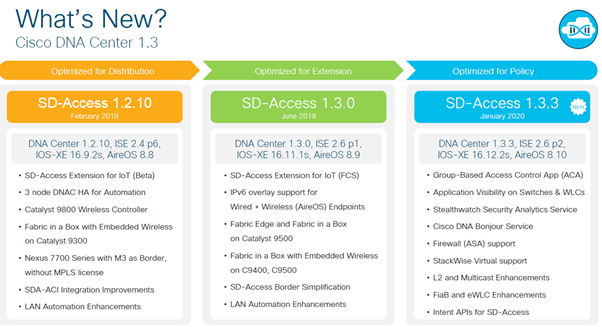

Cisco DNA Center Release 2.1.2.x has been released, but I have limited information on what's new.

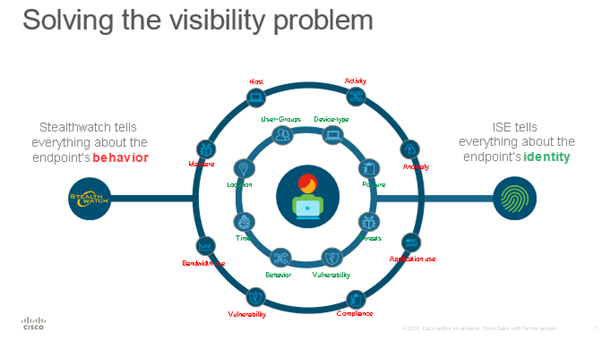

ISE Role in SDA

Stealthwatch

More labs coming soon... Happy labbing!